Many forward-thinking people are asking machine learning experts about the ethical implications of machine learning. Common questions include: How will machines know the right thing to do? Will machines ever be able to behave ethically? Will robots be able to blend seamlessly into society?

In this post, we explore these questions and how machines might be able to behave ethically.

What are ethics?

According to the Markkula Center for Applied Ethics, ethics is based on well-founded standards of right and wrong that prescribe what humans ought to do, usually in terms of rights, obligations, benefits to society, fairness, or specific virtues. The Internet Encyclopedia of Philosophy states that ethics “involves systematizing, defending, and recommending concepts of right and wrong behavior.”

These definitions suggest that ethics, unlike law, is not written down in a single formal code. Instead, people develop their ethics through their experiences with other people, and different people come to hold different ethics. Ethical norms also vary from one region and culture to another.

How will machines have ethics?

If that is the case, an obvious and important question follows: how would computers develop ethics of their own? After all, computers do not have the luxury of growing up among people and learning from those experiences. They are usually programmed with explicit, rigid algorithms written in a programming language. In other words, humans must tell computers how to behave.

In the era of machine learning, however, computers are also taught to perform certain tasks somewhat the way humans are taught: by being shown examples of what to do and what not to do. When it comes to ethics, the question is where we get the rules and examples we teach our computers.

The answer, perhaps obviously, is that we have to encode our own human ethics as lists of rights and wrongs. Without such a list, we have nothing to teach our computers. So how do we get such a list?

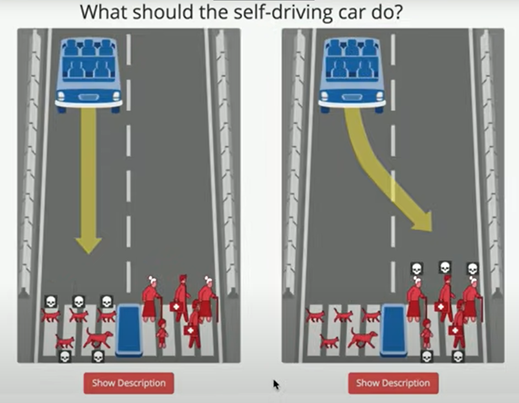

The Massachusetts Institute of Technology (MIT) is conducting a study of how humans perceive the ethical and moral decisions made by machine intelligence, such as self-driving cars. The experiment presents moral dilemmas in which a driverless car must choose the lesser of two evils, such as killing two passengers or killing five pedestrians. Anyone can participate by visiting https://www.moralmachine.net/.

Machine Learning Ethics at MIT

We must keep in mind that ethical norms differ across continents, countries, and communities. We may need to run these experiments locally to ensure that our future robotic companions behave appropriately wherever they are deployed.

The Future of Machine Ethics

In 2014, Stephen Hawking famously told the BBC: “The development of full artificial intelligence could spell the end of the human race.” Fortunately, the world-renowned physicist described only one of several possible outcomes. Since we humans create our robots and self-driving cars, we are ultimately responsible for programming them, or teaching them, right from wrong.

We have outlined a process through which we can study the ethical behavior of humans, encode it into clearly defined rules, and teach those rules to our computers. Some machine learning experts believe that making machines ethical is a straightforward engineering process, complete with the usual quality assurance (QA), that will lead to the desired outcome of ethical AI.

We Can Help

At Sidespin Group, we have seen our fair share of machine learning projects go astray for lack of proper expertise. We are here to provide machine learning expertise and can advise on how best to approach a project to ensure its success. For help with AI/ML strategy, please contact Sidespin Group’s machine learning experts for guidance.