AI-Generated Code Faces Legal Scrutiny: The Role of Software and AI Expert Witnesses

A class-action lawsuit against GitHub, Microsoft, and OpenAI, filed in a U.S. federal court in November 2022, has drawn significant attention to the legal challenges surrounding AI-generated code, particularly tools like GitHub Copilot, which is powered by OpenAI Codex. The case questions whether these platforms comply with the terms of open-source licenses and raises broader questions about the role of artificial intelligence in software development. A software expert witness or an AI expert witness can play a critical role in navigating the technical and legal complexities of such disputes.

Understanding GitHub Copilot and OpenAI Codex

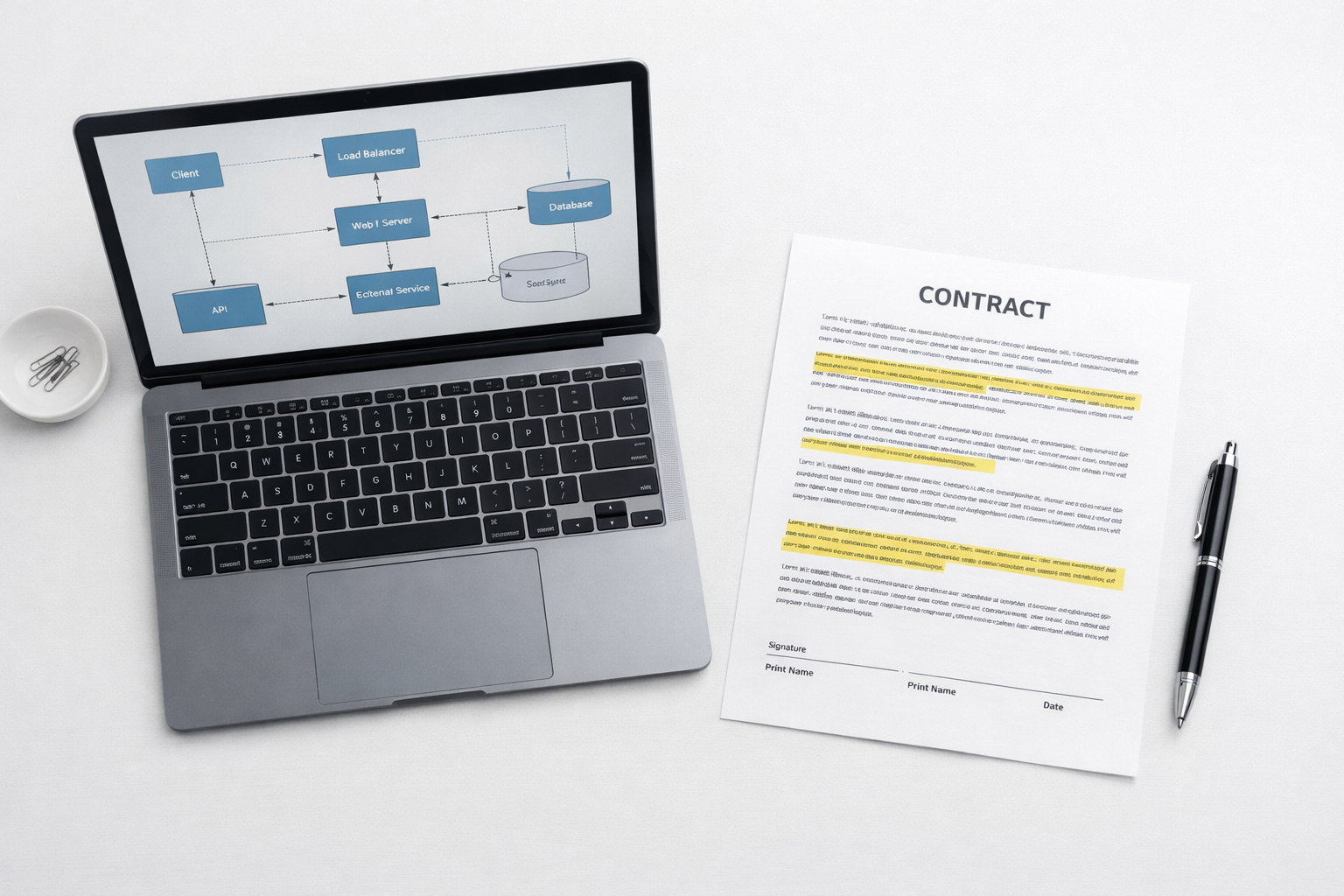

GitHub Copilot is a cloud-based coding assistant that uses an AI model trained on publicly available source code, including public GitHub repositories, to suggest code based on the code and comments a developer is currently writing. Functioning like an advanced autocomplete tool, it draws on patterns learned from that training data rather than looking up repositories when it makes a suggestion. For instance, if a developer starts writing a function for a sorting algorithm, Copilot can propose a complete implementation, saving time and effort. However, the lawsuit alleges that Copilot can reproduce licensed code without the attribution, copyright notice, or license text that open-source licenses such as MIT, GPL, and Apache typically require.

The Core of the Lawsuit: Copyright and Fair Use

The lawsuit centers on whether GitHub Copilot’s use of open-source code constitutes a violation of copyright law or falls under the “fair use” doctrine. Fair use allows limited use of copyrighted material without permission for purposes like criticism, teaching, or research. However, the plaintiffs' legal team, which includes programmer and lawyer Matthew Butterick, argues that Copilot’s reliance on billions of lines of human-written code amounts to software piracy, disregarding open-source license requirements. Butterick emphasizes that AI systems should be held to the same standards as human developers, and that widespread license violations cannot be dismissed as an inevitable byproduct of AI innovation. A source code review expert witness can assess whether specific code suggestions reproduce protected works or are closer to transformative use, and a software expert witness can provide clarity on how these licenses are implemented and enforced in practice.
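As a rough illustration of how a source code reviewer might begin comparing a suggested snippet against a known licensed source, the sketch below computes a Jaccard similarity over overlapping token sequences ("shingles"). It is a simplified screening heuristic under stated assumptions, not a forensic method or a legal test: the function names, the shingle length `k`, and the tokenization rule are all illustrative choices, and comments are treated as ordinary tokens rather than stripped.

```python
import re


def tokenize(code: str) -> list[str]:
    # Split into identifiers, numbers, and single punctuation characters.
    # Whitespace is discarded, so layout changes do not affect the result.
    return re.findall(r"[A-Za-z_]\w*|\d+|[^\s\w]", code)


def shingles(tokens: list[str], k: int = 5) -> set[tuple[str, ...]]:
    # Build the set of all runs of k consecutive tokens.
    if len(tokens) < k:
        return {tuple(tokens)} if tokens else set()
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def jaccard(a: str, b: str, k: int = 5) -> float:
    # Ratio of shared shingles to all shingles; 1.0 means identical token
    # sequences, 0.0 means no run of k tokens in common.
    sa, sb = shingles(tokenize(a), k), shingles(tokenize(b), k)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical inputs: the same function with different spacing.
original = "def add(a, b):\n    return a + b\n"
suggestion = "def add(a,b):  return a+b"
score = jaccard(original, suggestion)
```

A high score only flags a pair for closer human review. Renamed identifiers lower the score without changing the underlying logic, and short, common idioms can score high without any copying, so a number like this is a starting point for analysis rather than a conclusion.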

Broader Implications for AI and Open-Source Development

The outcome of this lawsuit could redefine the boundaries of copyright law for AI-generated code and influence how developers use tools like Copilot or Amazon’s CodeWhisperer. Some experts challenge GitHub’s fair use claim, arguing it does not address issues like breach of contract, privacy concerns, or violations under the Digital Millennium Copyright Act (DMCA). Others contend that the responsibility lies with developers who misuse copyrighted code, not the AI tool itself. An AI expert witness can help dissect the technical mechanisms of these platforms, explaining how machine learning models are trained and whether they comply with legal standards.

The case also raises questions about the ethics of training AI models on publicly available internet data while commercializing the results. This issue extends beyond GitHub: Stack Overflow, for example, banned ChatGPT-generated answers, citing the high rate at which they were incorrect, and the episode fed a wider debate about AI systems built on human-curated data without proper attribution. These developments highlight the need for expert testimony to bridge the gap between complex technology and legal frameworks.

The Role of Software Expert Witnesses in AI-Related Litigation

Navigating lawsuits involving AI and software requires deep technical expertise. A software expert witness can analyze codebases, licensing agreements, and development practices to determine compliance with legal standards. Similarly, an AI expert witness can evaluate the training processes of machine learning models, shedding light on whether tools like Copilot infringe on intellectual property rights. At Sidespin Group, our team offers specialized knowledge in copyright infringement, trade secret misappropriation, and patent disputes. We provide comprehensive technical insights to support legal teams and are available for free consultations to discuss your case.
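One small, concrete piece of a codebase licensing review is checking which files declare a license. The sketch below looks for `SPDX-License-Identifier` tags, a widely used convention for marking a file's license in a comment. The function name and the sample file contents are hypothetical, and the input is an in-memory mapping of file names to text so the example stays self-contained.

```python
import re
from typing import Optional

# Matches the tag and captures the license expression that follows it,
# stopping at characters such as "*" that close a block comment.
SPDX_RE = re.compile(r"SPDX-License-Identifier:\s*([A-Za-z0-9.\-+ ()]+)")


def find_spdx_ids(files: dict[str, str]) -> dict[str, Optional[str]]:
    # Map each file name to its declared SPDX expression, or None if the
    # file carries no tag. Only the first tag in each file is reported.
    results: dict[str, Optional[str]] = {}
    for name, text in files.items():
        match = SPDX_RE.search(text)
        results[name] = match.group(1).strip() if match else None
    return results


# Hypothetical sample files for illustration only.
sample = {
    "a.py": "# SPDX-License-Identifier: MIT\nprint(1)\n",
    "b.c": "/* SPDX-License-Identifier: GPL-2.0-only */\nint x;\n",
    "c.js": "console.log(1);\n",
}
report = find_spdx_ids(sample)
```

A missing tag does not mean a file is unlicensed, since many projects state their license only in a top-level file, so a scan like this narrows down where manual review is needed rather than replacing it.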

For more information on open-source licensing, visit the Open Source Initiative. To understand the fair use doctrine, explore resources at the U.S. Copyright Office. For updates on AI and copyright law, check TechCrunch for the latest industry news.

